Electrical engineers at the University of Nebraska-Lincoln (UNL) have written the world’s top-ranked algorithm for a 3-D imaging process poised to enhance robotic surgery, help navigate driverless cars and assist rescue operations.

In October, the UNL algorithm earned the best overall score to date on the Middlebury Stereo Benchmark, a widely accepted measure of algorithmic accuracy and speed.

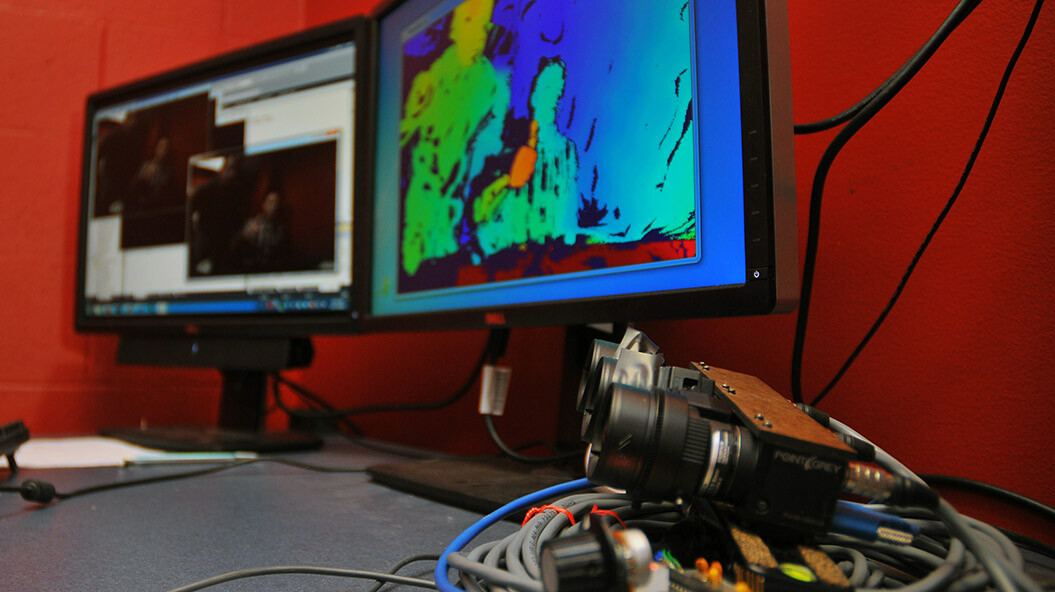

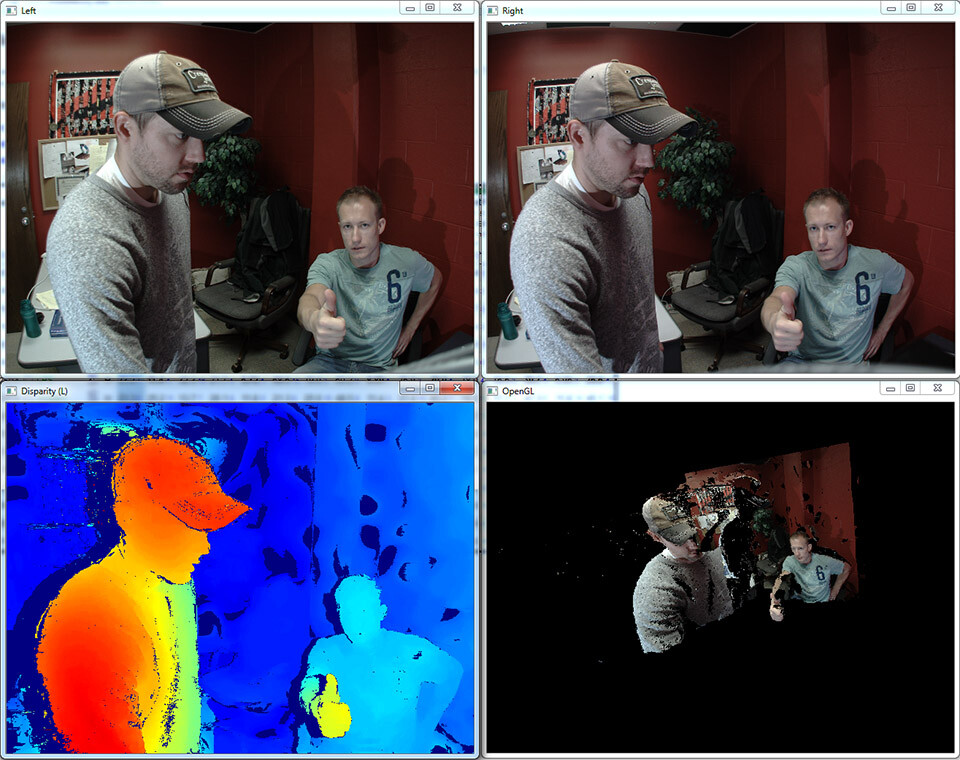

The algorithm drives a process called stereo matching, which lets computers mimic the depth perception of human eyesight by combining images from two video cameras into a three-dimensional representation of a scene.

“The amount of data being processed by our brains is actually kind of remarkable,” said Eric Psota, a research assistant professor of electrical engineering who co-wrote the algorithm with doctoral candidate Jędrzej Kowalczuk. “The problem of stereo matching in the digital age is: How do you get a computer to do what human beings do?”

The human visual system employs multiple methods to provide a sense of three-dimensional space, Psota said. One of these is convergence, the inward turning of both eyes so that they focus on the same object, a principle that also forms the core of stereo matching.

“The amount of convergence that’s required for our eyes is proportional to the depth of the thing that we’re looking at,” Psota said. “If you’re looking at the moon, there’s really no convergence of the eyes … whereas if you’re looking at your finger, your eyes converge a great deal. Determining the amount of convergence and calculating the corresponding depth from that is the overall goal of stereo matching.”
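The moon-and-finger comparison follows from simple triangle geometry. The short Python sketch below is an illustration, not part of the UNL work. It assumes both eyes fixate a point straight ahead and an interpupillary distance of about 63 mm (a typical adult value, chosen here only for illustration), and computes the vergence angle between the two lines of sight as 2·atan(B / 2Z) for eye separation B and distance Z. The angle shrinks toward zero as Z grows, which is why a nearby finger demands far more convergence than the moon.

```python
import math

# Assumed interpupillary distance (baseline) of about 63 mm; real values vary by person.
EYE_BASELINE_M = 0.063


def vergence_angle_deg(distance_m: float, baseline_m: float = EYE_BASELINE_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (symmetric geometry)."""
    return math.degrees(2.0 * math.atan(baseline_m / (2.0 * distance_m)))


def distance_from_vergence_m(angle_deg: float, baseline_m: float = EYE_BASELINE_M) -> float:
    """Invert the relation: recover the distance from a vergence angle."""
    return baseline_m / (2.0 * math.tan(math.radians(angle_deg) / 2.0))


finger = vergence_angle_deg(0.3)        # a finger held about 30 cm away
moon = vergence_angle_deg(384_400_000)  # mean Earth-Moon distance in meters
print(f"finger: {finger:.2f} deg, moon: {moon:.2e} deg")
print(f"recovered finger distance: {distance_from_vergence_m(finger):.3f} m")
```

Because the relation can be inverted, a known angle pins down the distance; stereo matching relies on the same triangulation idea, with two cameras standing in for the eyes.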

Stereo matching emulates eyesight, Psota said, by finding where each pixel in the left camera’s image appears in the right camera’s image and calculating how far its position has shifted. How accurately an algorithm measures this shift between corresponding pixels, known as disparity, dictates how well it captures the depth of a given scene.
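To make the idea of disparity concrete, here is a minimal winner-take-all block matcher for a single scanline in plain Python. It is a textbook technique, not the UNL algorithm, and it assumes rectified images (so a point in the left image lies on the same row in the right image, shifted left by its disparity), a sum-of-absolute-differences (SAD) cost over a small window, and hypothetical camera parameters. The last function converts disparity d to depth with the standard rectified-stereo relation Z = f·B/d, where f is the focal length in pixels and B is the distance between the two cameras.

```python
from typing import List, Optional


def sad_cost(left: List[float], right: List[float], x: int, xr: int, half: int) -> float:
    """Sum of absolute differences between windows centered at x (left) and xr (right)."""
    return sum(abs(left[x + k] - right[xr + k]) for k in range(-half, half + 1))


def match_scanline(left: List[float], right: List[float], max_disp: int,
                   half: int = 1) -> List[Optional[int]]:
    """Winner-take-all disparity for each pixel of one rectified scanline.

    A pixel at column x in the left image is compared with column x - d in the
    right image for d = 0..max_disp; the lowest-cost d wins. Pixels too close to
    the border for a full window are left as None.
    """
    n = len(left)
    disparity: List[Optional[int]] = [None] * n
    for x in range(half, n - half):
        best_d, best_cost = None, float("inf")
        for d in range(max_disp + 1):
            xr = x - d
            if xr - half < 0:
                break  # the window would fall off the left edge of the right image
            cost = sad_cost(left, right, x, xr, half)
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparity[x] = best_d
    return disparity


def depth_from_disparity(d_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in meters from disparity in pixels (rectified pinhole cameras)."""
    if d_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / d_px


# Toy scanline: the right view is the left view shifted two pixels to the left.
left = [0, 0, 0, 0, 10, 50, 90, 50, 10, 0, 0, 0]
right = left[2:] + [0, 0]
disp = match_scanline(left, right, max_disp=4)
print(disp[4:9])
print(depth_from_disparity(2, focal_px=700, baseline_m=0.1))
```

Because depth is inversely proportional to disparity, a one-pixel matching error on a distant object produces a much larger depth error than the same mistake on a nearby one, which is one reason matching accuracy matters so much.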

The Middlebury Stereo Benchmark tasks an algorithm with matching high-resolution image pairs captured under various conditions, then calculates an overall score as a weighted average of its performance across 15 image pairs. The benchmark ranked UNL’s algorithm ahead of those from researchers across North America, Asia and Europe, many of which were also submitted in 2014.
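The scoring idea can be illustrated with a short sketch. It assumes an error metric in the spirit of the benchmark’s bad-pixel percentages (the share of pixels whose disparity is off by more than a threshold) and a weighted average of per-pair errors. The threshold, weights and error values here are hypothetical; they are not the benchmark’s actual settings or UNL’s results. Lower scores are better.

```python
from typing import Optional, Sequence


def bad_pixel_rate(estimate: Sequence[float], truth: Sequence[Optional[float]],
                   threshold: float = 2.0) -> float:
    """Percentage of pixels with known ground truth whose disparity error exceeds threshold."""
    pairs = [(e, t) for e, t in zip(estimate, truth) if t is not None]
    if not pairs:
        raise ValueError("no pixels with ground truth")
    bad = sum(1 for e, t in pairs if abs(e - t) > threshold)
    return 100.0 * bad / len(pairs)


def weighted_score(errors: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of per-image-pair errors."""
    if len(errors) != len(weights) or not errors:
        raise ValueError("need one weight per image pair")
    return sum(e * w for e, w in zip(errors, weights)) / sum(weights)


# Hypothetical per-pair error percentages for 15 image pairs, with three pairs
# down-weighted purely for illustration.
errors = [8.0, 5.5, 12.0, 7.2, 9.9, 6.1, 4.3, 15.0, 10.2, 3.8, 6.6, 11.1, 20.0, 18.5, 9.0]
weights = [1.0] * 12 + [0.5] * 3
print(round(weighted_score(errors, weights), 2))
```

A weighted average like this rewards methods that perform well across varied scenes rather than excelling on a single image pair.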

The team’s stereo-matching algorithm uses parallel processing to independently yet simultaneously compute the depth of individual pixels, Psota said, which contributes to its efficiency. The algorithm can also use this data to make probabilistic inferences about the depth of more ambiguous areas, such as blank white walls in the background of an image.
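Per-pixel independence is what makes this kind of work easy to parallelize. The sketch below is a generic illustration under stated assumptions, not the team’s code. It assumes each pixel already has a list of matching costs, one per candidate disparity, converts those costs into a probability distribution with a softmax (a common way to express match uncertainty; the temperature value is a hypothetical choice), and evaluates pixels concurrently with Python’s `ThreadPoolExecutor`. A textured pixel yields one dominant disparity, while a pixel on a blank wall spreads probability across several candidates, flagging it as ambiguous.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

TEMPERATURE = 10.0  # hypothetical softness of the cost-to-probability mapping


def disparity_distribution(costs: Sequence[float]) -> List[float]:
    """Softmax over negated costs: lower cost means higher probability."""
    lowest = min(costs)  # subtracting the minimum keeps exp() numerically stable
    weights = [math.exp(-(c - lowest) / TEMPERATURE) for c in costs]
    total = sum(weights)
    return [w / total for w in weights]


def estimate_pixel(costs: Sequence[float]) -> Tuple[int, float]:
    """Return (most probable disparity, its probability) for one pixel."""
    probs = disparity_distribution(costs)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


def estimate_all(cost_volume: Sequence[Sequence[float]],
                 workers: int = 4) -> List[Tuple[int, float]]:
    """Pixels are independent, so they can be evaluated concurrently; map keeps input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(estimate_pixel, cost_volume))


textured = [50.0, 0.0, 60.0, 80.0]  # one clear minimum at disparity 1
blank_wall = [5.0, 4.0, 5.0, 4.0]   # nearly flat costs: no clear winner
results = estimate_all([textured, blank_wall])
for d, p in results:
    print(f"disparity {d}, confidence {p:.2f}")
```

Python threads illustrate the independence of the work but, because of the global interpreter lock, would not speed up this CPU-bound loop; real-time systems typically map per-pixel work onto GPU threads or vectorized code instead.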

“We establish an initial set of matches, and then we try to share this information among pixels to refine the initial estimate of depth in the following iterations,” said Kowalczuk, whose dissertation centers on the algorithm. “In every iteration, we’re able to isolate very reliable matches for which we can say, with high confidence, ‘This must be the true match for a given pixel.’ This is the information we pass along to the pixels we deem less reliable.”
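The refinement loop Kowalczuk describes can be illustrated with a deliberately simplified, hypothetical one-dimensional sketch; it is not the UNL algorithm, whose actual propagation scheme is not detailed here. It assumes each pixel starts with a disparity and a confidence between 0 and 1, treats pixels at or above a threshold as reliable, and in every iteration lets an unreliable pixel adopt the disparity of its most confident reliable neighbor, inheriting that confidence multiplied by a decay factor. The threshold, neighborhood radius and decay are illustrative parameters.

```python
from typing import List, Sequence, Tuple


def refine(disparity: Sequence[int], confidence: Sequence[float], threshold: float = 0.8,
           radius: int = 1, decay: float = 0.9,
           max_iters: int = 10) -> Tuple[List[int], List[float]]:
    """Iteratively pass disparities from reliable pixels to less reliable neighbors (1-D toy)."""
    disp, conf = list(disparity), list(confidence)
    n = len(disp)
    for _ in range(max_iters):
        reliable = [c >= threshold for c in conf]  # decided once per iteration
        new_disp, new_conf = list(disp), list(conf)
        changed = False
        for i in range(n):
            if reliable[i]:
                continue
            neighbors = range(max(0, i - radius), min(n, i + radius + 1))
            donors = [j for j in neighbors if reliable[j]]
            if not donors:
                continue
            j = max(donors, key=lambda k: conf[k])
            inherited = conf[j] * decay
            if inherited > conf[i]:  # only accept information that raises confidence
                new_disp[i], new_conf[i] = disp[j], inherited
                changed = True
        disp, conf = new_disp, new_conf
        if not changed:
            break  # nothing left to propagate
    return disp, conf


# A reliable match at the left edge of a blank region with unreliable guesses.
d, c = refine([5, 1, 1, 1], [0.95, 0.1, 0.1, 0.1])
print(d)
```

Because confidence decays at each hop, information stops spreading once it falls below the threshold; in this run the last pixel keeps its original guess. Many practical methods also weigh neighbors by color similarity so that depth does not leak across object boundaries.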

Though iterative methods typically run more slowly than non-iterative ones, the UNL algorithm is one of the few capable of generating depth imagery in real time, according to Psota. This greatly broadens the range of applications for which it might be used, he said.

Applications galore

The team has already collaborated with mechanical engineers at UNL to advance the design of surgical robots that can be inserted into the abdomen – or even swallowed – before opening up to perform surgery inside the body. As a result, Psota said, surgeons can use stereo matching to precisely manipulate the robots in a field where every millimeter counts.

“We’ve even taken that a step further,” Psota said. “In certain operations – making an incision or stitch, for instance – it’s an advantage if the robot can interact more autonomously. To do that, it needs to understand what it’s looking at. It’s impossible to make a three-dimensional incision without perceiving that third dimension. That’s what got us started on the stereo-matching problem.”

Psota and his colleagues have also applied their algorithm to stereo cameras mounted on miniature quadrotor helicopters, whose size and maneuverability make them ideal for reaching otherwise inaccessible areas.

“This has applications in search-and-rescue,” Psota said. “If there’s an earthquake or flood, you could deploy this in the disaster area. The idea is to use the stereo images to reconstruct everything in the environment. So it can map while it’s flying, but it can also navigate, which might be even more important.”
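Reconstructing an environment from stereo images usually means turning each pixel’s disparity into a 3-D point. The sketch below shows that back-projection under the standard pinhole model for rectified cameras; the focal length, baseline and principal point (cx, cy) are hypothetical values, and the mapping and navigation logic a drone would layer on top are not shown.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def disparity_to_points(disparity: Sequence[Sequence[Optional[float]]], focal_px: float,
                        baseline_m: float, cx: float, cy: float) -> List[Point]:
    """Back-project a disparity map into (X, Y, Z) points in the left camera's frame, in meters."""
    points: List[Point] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d is None or d <= 0:
                continue  # no valid match, or a point effectively at infinity
            z = focal_px * baseline_m / d  # depth from disparity
            x = (u - cx) * z / focal_px    # horizontal offset from the optical axis
            y = (v - cy) * z / focal_px    # vertical offset (image rows grow downward)
            points.append((x, y, z))
    return points


# A tiny 2x2 disparity map with one missing match and one zero disparity.
cloud = disparity_to_points([[None, 10.0], [20.0, 0.0]],
                            focal_px=500.0, baseline_m=0.2, cx=1.0, cy=0.0)
print(cloud)
```

Accumulating such points from successive frames, once each frame’s camera position is known, is one way to build the kind of map Psota describes.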

The team further cited stereo matching’s use in vehicular safety systems and self-driving cars such as those being developed by Google, which has also employed it to update Google Earth. The process could also eventually help guide the visually impaired, according to Psota, who said the technology’s uses extend as far as the imaginations of those who work with it.

“There are many other potential applications,” Psota said. “It’s not difficult to think of all sorts of ways that you could use this.”