Anyone who’s ever asked a question by email or text can attest to the fact that the way the question is asked — and which answers are offered as options — can sometimes matter as much as the question itself.

How much does Kristen Olson agree or disagree with the previous statement?

◯ Strongly Agree

◯ Agree

◯ Neither Agree nor Disagree

◯ Disagree

◯ Strongly Disagree

Or, put another way:

How well does the previous statement describe Kristen Olson’s thinking?

◯ Completely

◯ Mostly

◯ Somewhat

◯ A Little Bit

◯ Not at All

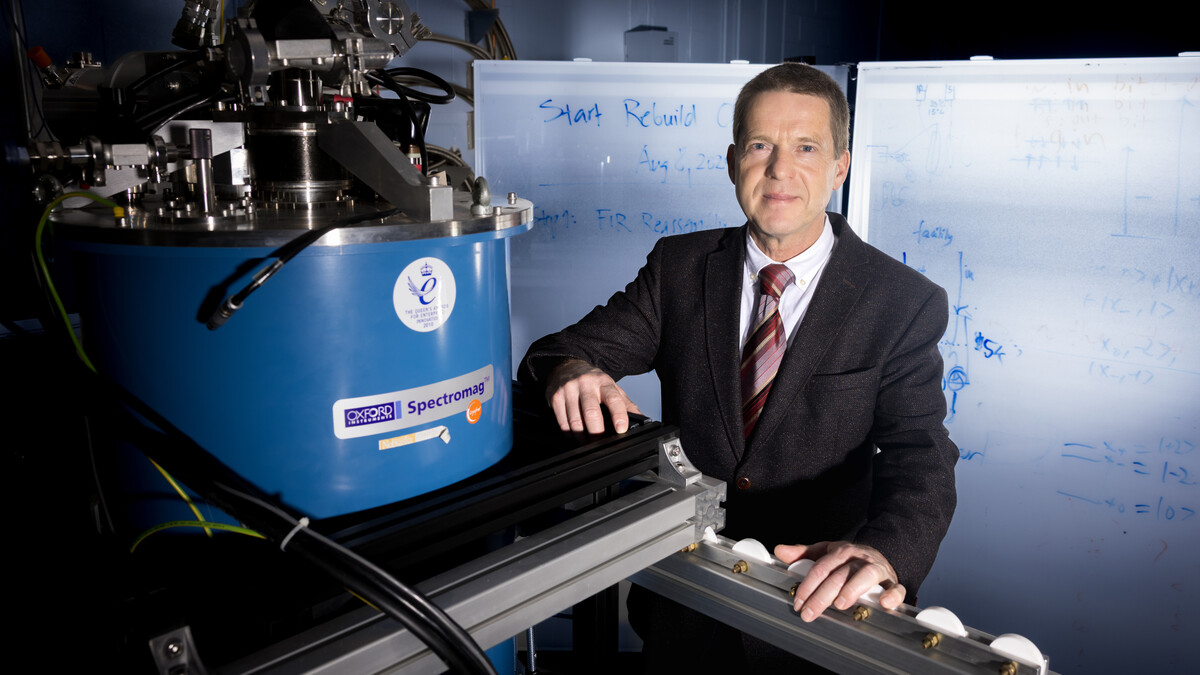

As a survey methodologist at the University of Nebraska–Lincoln, Olson practices the science of questioning questions: which ones to ask, sure, but also how to formulate those questions and their answers to draw out what someone actually perceives and believes.

By that measure, Olson and two colleagues recently found evidence that the second of the two formats above — a self-description scale — can outperform the much more common agree/disagree scale.

The agree/disagree scale rose to prominence for multiple reasons, some practical and some self-reinforcing. It’s versatile, lending itself to measuring an array of ideas and subjects. It’s efficient, allowing researchers to save space, which has traditionally been at a premium in mail surveys, and attention, maybe the scarcest resource of all in the era of web surveys. As much as anything, though, it’s popular, meaning that researchers looking to consistently collect data across multiple years will often turn to the agree/disagree scale almost by default.

But it’s not without its problems. For one, research suggests that it’s prone to acquiescence bias: the tendency for respondents to become yea-sayers who disproportionately choose positive answers or simply agree with a statement, especially when it’s a socially desirable one.

“It’s really easy to just say you agree with something,” Olson said. “It doesn’t require as much thought; it doesn’t require you to engage as much with a topic. So agree/disagree response options have this massive problem, because ‘Agree’ is literally one of the responses.”

Its bipolar nature — the presence of a theoretically neutral, central point, “Neither Agree nor Disagree,” on which the two opposing sides of the scale balance — can also impair its reliability. In some cases, a respondent might be liable to answer the same question differently when presented with it later, even if their opinion remains unchanged.

“Respondents have a really hard job when they’re answering survey questions, especially with these bipolar scales,” Olson said. “Do they (reside) more on the agree side or more on the disagree side? And if they feel kind of mixed about it, which side wins out?

“You might agree that the University of Nebraska–Lincoln is the greatest university in the world, but you might also think, ‘That football team needs a little bit of work.’ Well, which one is the most salient (in the moment)?”

So when Olson and her colleagues collaborated with researchers in the Department of Sociology to administer the National Health, Wellbeing and Perspectives Study survey, they took a beat to consider the wisdom and conventions of the conventional wisdom. As usual, precedent weighed heavily in favor of the agree/disagree scale, which some portions of the survey have always employed. Still, the methodologists were curious about the potential advantages and drawbacks of pivoting to the self-description scale. They dove into the research literature for guidance and discovered, with some wonder, that there really wasn’t any.

“There was no literature we could find on anybody who had ever compared the self-description scales to agree/disagree scales, even though we use them all the time, in all sorts of studies,” Olson said. “So we thought, ‘Well, this seems like an obvious thing to actually test.’”

Agree to disagree

That “we” included Jolene Smyth, professor and chair of sociology at Nebraska, and recent doctoral graduate Jerry Timbrook, now at RTI International and lead author of the trio’s study in the International Journal of Market Research.

Their analyses generally supported their expectations, and the value of the self-description scale. On half of the questions with a positive bent — “I consider myself a leader,” for instance, or “Helping my community is important to me” — the average score was measurably lower via the self-description scale than via the agree/disagree scale. On that same set of questions, the self-description scale yielded more varied responses. It also produced fewer instances of respondents choosing the same answer over and over and over again.

Those results collectively suggest that the self-description scale helped reduce acquiescence bias and, more broadly, “that people were considering their answers more thoughtfully” — a primary goal of any survey, Olson said, and one likely to yield more valid data. “For questions that people want to agree with, they have more space to put themselves in the self-description scale as opposed to the agree/disagree scale.”
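For readers who want to see the three comparisons side by side (average score, variety of responses, and repeated identical answers), here is a minimal sketch in Python. It is not the researchers’ analysis: the function names and the response values are invented for illustration, and it assumes each answer is coded from 1 (least positive) to 5 (most positive).

```python
from statistics import mean, pstdev


def is_straightlined(answers):
    """True when a respondent gave the identical answer to every item."""
    return len(set(answers)) == 1


def summarize(responses):
    """Summarize a list of respondents, each a list of 1-5 item scores."""
    all_scores = [score for answers in responses for score in answers]
    return {
        "mean": mean(all_scores),        # average score across all items
        "spread": pstdev(all_scores),    # how varied the answers are
        "straightliners": sum(is_straightlined(a) for a in responses),
    }


# Invented responses for illustration only; these are NOT data from the study.
agree_disagree = [[5, 5, 5], [4, 4, 4], [5, 4, 5]]
self_description = [[4, 3, 5], [2, 4, 3], [5, 5, 5]]

ad = summarize(agree_disagree)
sd = summarize(self_description)
print(ad)
print(sd)
```

In this made-up example, the self-description answers have a lower average, a wider spread and fewer respondents repeating one answer, which is the general pattern the study reports for positively worded questions.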

The disparity in average scores observed on the positive questions was even more evident on questions that yielded relatively negative responses. In fact, that disparity was so pronounced that Olson and her colleagues interpreted it not as evidence of reduced acquiescence bias, but instead as a byproduct of a related difference between the two scales. The greater range of positive expression granted by the self-description scale’s first four responses (“Completely,” “Mostly,” “Somewhat,” “A Little Bit”) essentially squeezes the negative into a single response, “Not at All,” that rates as a 1. By contrast, the agree/disagree scale offers two negative options: its own 1, “Strongly Disagree,” but also a 2, “Disagree.”

In much the same way that agree/disagree scales seem to inflate positive responses to positive questions, then, self-description scales might be confining negative responses into a cell too small to hold them, artificially dragging down those scores in the process.
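The asymmetry between the two scales can be laid out in a few lines of Python. This is only a sketch: the scores of 1 and 2 come from the description above, while the remaining values assume a plain 5-to-1 coding, and the names `NEGATIVE_LABELS` and `negative_scores` are hypothetical.

```python
# Scores of 1 and 2 follow the article; the other values assume a plain 5-to-1 coding.
AGREE_DISAGREE = {
    "Strongly Agree": 5,
    "Agree": 4,
    "Neither Agree nor Disagree": 3,
    "Disagree": 2,
    "Strongly Disagree": 1,
}
SELF_DESCRIPTION = {
    "Completely": 5,
    "Mostly": 4,
    "Somewhat": 3,
    "A Little Bit": 2,
    "Not at All": 1,
}

# Which labels count as negative on each scale, per the article's description.
NEGATIVE_LABELS = {
    "agree/disagree": {"Disagree", "Strongly Disagree"},
    "self-description": {"Not at All"},
}


def negative_scores(scale, negative_labels):
    """Return the sorted numeric scores available for a negative answer."""
    return sorted(score for label, score in scale.items() if label in negative_labels)


ad_negative = negative_scores(AGREE_DISAGREE, NEGATIVE_LABELS["agree/disagree"])
sd_negative = negative_scores(SELF_DESCRIPTION, NEGATIVE_LABELS["self-description"])
print(ad_negative)  # [1, 2]
print(sd_negative)  # [1]
```

A respondent with a negative view can land on either a 1 or a 2 under the agree/disagree scale, but only on a 1 under the self-description scale, which is why average scores on negatively received questions can drop after the switch.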

Yet even if the self-description scale is not preferable under all conditions — if the two scales are effectively balanced by the nature of the questions — Olson said the study makes a compelling case for putting the self-description scale into wider use. Or, at the very least, for methodologists adopting the same consideration that the scale itself seems to bring out in respondents.

Hopefully, she said, the latter is a point on which her fellow methodologists can strongly agree.

“If you’re asking questions where most people are going to disagree or strongly disagree, then a self-description scale might not be the thing for you. But if you’re asking questions where most of the answers are going to be ‘Agree’ or ‘Strongly Agree,’ and you switch to a self-description scale,” Olson said, “you’ll probably get better data.”