To observe traits that can’t be easily seen, Husker researchers have turned to the wavelengths that can’t be, either.

The University of Nebraska–Lincoln’s Hongfeng Yu, Tian Gao and Harkamal Walia have developed a new imaging system that could help capture the nutritional value of seeds from myriad crops by first capturing the invisible wavelengths reflecting from them.

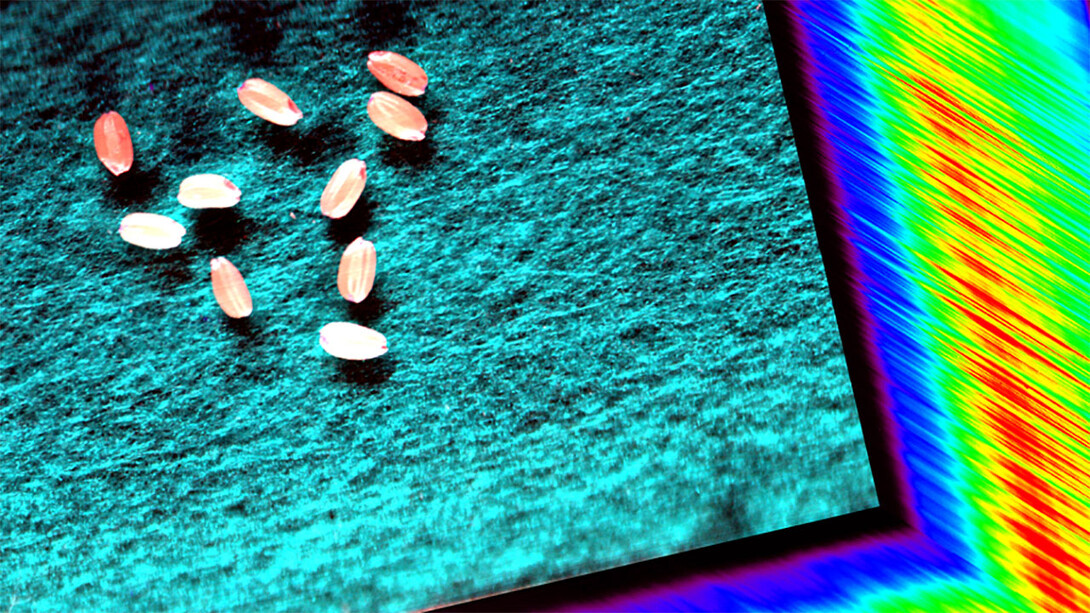

With the ability to scan dozens of seeds in a mere 15 seconds, it’s fitting that the system’s name, HyperSeed, is just one letter removed from a word meaning “extraordinary speed.” But the system actually owes that name to its hyperspectral camera, whose sight goes beyond the visible bands of red, green and blue wavelengths that are the purview of typical cameras and the human eye.

Unlike those traditional counterparts, HyperSeed’s camera can also detect electromagnetic wavelengths longer than red, known as infrared. Just as an object reflects the visible wavelengths of its color while absorbing all others on the visible spectrum, it can also reflect and absorb infrared wavelengths. Which of those IR wavelengths will reflect toward a hyperspectral camera, and which will get absorbed, depends on the properties of the object, in this case a seed. That grants the invisible wavelengths a diagnostic value that plant physiologists like Walia are keen to explore.

The researchers see HyperSeed as a tool for rapidly comparing and evaluating seeds produced under arduous conditions by the crop varieties engineered or bred to endure those very conditions, especially heat and drought. That pursuit reflects the status of seeds as the alpha and omega of the agricultural process: the starting points for growing crops, but also endpoints in the form of grains — rice, corn, wheat and many others — that feed a massive proportion of the world’s population.

Maximizing the size and nutritional value of kernels under unfavorable conditions will only gain importance, the researchers said, as global temperatures continue rising, droughts become more severe, and the world population expands to approximately 10 billion people by the year 2050.

“We used more than 200 different wavelengths to scan the same seed, and many of these wavelengths are able to distinguish the biochemical properties of the seed: how well the starch is packaged, how much starch there is, how much other nutrient content there is,” said Walia, professor of agronomy and horticulture. “So this is a stepping stone for us to not only understand the status of a seed and the nutrition that it brings, but also enable us to scan, very quickly, thousands of seeds from different varieties.

“After HyperSeed was up and running, we started to look at grain quality in gene-edited lines that have just a single gene that’s been disrupted, to study the role of this gene in (withstanding) heat stress or drought stress.”

The team’s approach resembles one already employed at the Greenhouse Innovation Center on Nebraska Innovation Campus, where hyperspectral cameras are helping researchers measure plant traits much faster, more precisely and more easily than was once done by hand. But the scale of the greenhouse, and the distance between camera and plant, prevent the high-resolution scanning of seeds and similarly tiny parts.

As computer scientists, Yu and Gao saw an opportunity to address the issue. In collaboration with Walia, they designed a simple hardware setup consisting of a movable, motorized platform that sits beneath a mounted hyperspectral camera, which captures its images with the help of nearby lighting units. Though Walia purchased the hyperspectral camera from a vendor, Yu and Gao custom-built the rest with accessibility and affordability in mind.

“To build a complete solution, including software and hardware from vendors, could be very expensive,” said Yu, associate professor in the School of Computing and interim director of Nebraska’s Holland Computing Center. “Our teams worked together to design the system using materials available online or from local stores, so that the cost is significantly reduced. Each component ranges from a few dollars to a couple of hundred dollars.”

Yu and Gao also customized software that compiles the two-dimensional position and size of the seeds, then layers that data with reflectance signatures from however many wavelengths the camera is set to detect. The open-source software can extract those reflectance signatures on a seed-by-seed or even pixel-by-pixel basis, presenting the data in spreadsheets viewable with conventional programs. It can distinguish among overlapping seeds and classify the seeds by species, too.
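The per-seed extraction described above can be pictured as averaging every pixel that belongs to one seed, band by band, and writing one spreadsheet row per seed. The sketch below is a minimal illustration in Python, not HyperSeed’s own code. It assumes the image has already been segmented into a label mask (0 for background, a positive integer ID per seed) and that the hyperspectral cube is stored as nested lists; the function names `seed_signatures` and `to_csv` are hypothetical.

```python
import csv
import io


def seed_signatures(cube, labels):
    """Average each seed's pixels band by band.

    cube[y][x] is a list of reflectance values, one per wavelength band
    (this per-pixel spectrum is what a pixel-by-pixel export would use).
    labels[y][x] is 0 for background or a positive seed ID (assumed input).
    Returns {seed_id: [mean reflectance for each band]}.
    """
    sums, counts = {}, {}
    for row_px, row_lab in zip(cube, labels):
        for spectrum, seed_id in zip(row_px, row_lab):
            if seed_id == 0:
                continue  # skip background pixels
            acc = sums.setdefault(seed_id, [0.0] * len(spectrum))
            for band, value in enumerate(spectrum):
                acc[band] += value
            counts[seed_id] = counts.get(seed_id, 0) + 1
    return {sid: [s / counts[sid] for s in acc] for sid, acc in sums.items()}


def to_csv(signatures, wavelengths_nm):
    """Format signatures as CSV text: one row per seed, one column per band."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["seed_id"] + [f"{w}nm" for w in wavelengths_nm])
    for sid in sorted(signatures):
        writer.writerow([sid] + [round(v, 4) for v in signatures[sid]])
    return out.getvalue()


# Toy 2x2 image with two bands (made-up values, not HyperSeed data).
cube = [[[0.2, 0.4], [0.4, 0.6]],
        [[0.9, 0.1], [0.0, 0.0]]]
labels = [[1, 1],
          [2, 0]]
print(to_csv(seed_signatures(cube, labels), [1000, 1100]))
```

The resulting text opens directly in a conventional spreadsheet program, which mirrors the article’s point that the output needs no specialized viewer.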

To demonstrate one of HyperSeed’s potential applications, the researchers conducted a case study with rice seeds, testing the system’s ability to discern rice grown under optimal temperatures from that grown under heat stress. One of the team’s models, which Yu and Gao developed using machine learning, successfully distinguished between the two groups in 97.5% of cases.
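The article does not say which machine-learning model reached 97.5%, so the sketch below swaps in a deliberately simple technique, a nearest-centroid classifier, only to show the shape of the task: learn an average spectrum for each growing condition, assign a new seed to whichever average it sits closest to, and report accuracy as the fraction of seeds labeled correctly. The class names and spectra are made-up toy values, not the team’s data or model.

```python
import math


def centroid(spectra):
    """Band-by-band mean of a list of equal-length spectra."""
    n = len(spectra)
    return [sum(band) / n for band in zip(*spectra)]


def fit(train):
    """train maps a class label (e.g. 'control', 'heat') to a list of spectra."""
    return {label: centroid(spectra) for label, spectra in train.items()}


def predict(centroids, spectrum):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], spectrum))


def accuracy(centroids, test):
    """test is a list of (spectrum, true_label) pairs; returns fraction correct."""
    correct = sum(predict(centroids, s) == y for s, y in test)
    return correct / len(test)


# Hypothetical two-band spectra for seeds from each growing condition.
train = {
    "control": [[0.50, 0.60], [0.55, 0.65]],
    "heat": [[0.30, 0.35], [0.25, 0.30]],
}
model = fit(train)
test = [([0.52, 0.62], "control"), ([0.28, 0.33], "heat")]
print(accuracy(model, test))
```

A real study would use many more bands and seeds and hold out test seeds the model never saw during fitting; the simplicity here is for readability, not a claim about what HyperSeed uses.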

The researchers also identified the wavelengths that demonstrated the most potential for teasing apart the agriculturally and nutritionally relevant traits of seeds. Most of those wavelengths, they found, fall between 1,000 and 1,600 nanometers, in the near-infrared portion of the electromagnetic spectrum. That knowledge could help researchers make the most of their time and money by focusing their attention on the wavelengths in and around that range.
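Focusing on that range amounts to keeping only the bands whose wavelengths fall between 1,000 and 1,600 nanometers before any further analysis. A minimal Python sketch follows; the helper names `band_indices` and `subset` are hypothetical, and the band list in the example is illustrative rather than HyperSeed’s actual camera configuration.

```python
def band_indices(wavelengths_nm, low=1000.0, high=1600.0):
    """Indices of bands whose wavelength lies within [low, high] nanometers."""
    return [i for i, w in enumerate(wavelengths_nm) if low <= w <= high]


def subset(spectrum, indices):
    """Keep only the selected bands of one spectrum."""
    return [spectrum[i] for i in indices]


# Illustrative band centers, not the real sensor's.
wavelengths = [900, 1000, 1300, 1600, 1700]
keep = band_indices(wavelengths)
print(keep)  # the three bands inside 1,000-1,600 nm
print(subset([0.1, 0.2, 0.3, 0.4, 0.5], keep))
```

Trimming spectra this way shrinks every seed’s record before modeling, which is one concrete way the finding could save time and storage.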

“It’s one thing to really spend a lot of resources taking images — millions of images, if you want,” Walia said. “It’s also relatively easy these days to process those images, because computing is getting faster. But how do you actually analyze the images so that you can get what you need? That’s one of the things that Hongfeng has pursued with this, is making the information accessible to plant scientists like me, so that we don’t get bogged down with trying to do something that could take us years.”

For all of their strides on the efficiency front, Yu and Gao are now working to modify the system so that it not only captures multiple seeds at a time but also processes their reflectance signatures simultaneously, cutting yet more chaff from the process. They’re exploring ways to deploy the software at the Holland Computing Center for the sake of tackling massive images, too. But Yu also said he hopes the system’s open-source software and straightforward hardware give other users “flexible and economical solutions to get the data analysis they want,” with the ability to tailor the system to their specific research questions.

Even in its current form, the system is giving Walia cause to rummage through seeds and experiments from as far back as 2018 — this time, to examine them through the lens of a hyperspectral camera. It’s also stimulating ideas about how to best put that superhuman sight to use. One of those ideas? Cracking open the seeds to get an extreme close-up.

“The surface is great, but what if we had a broken grain and analyzed it face-up?” Walia wondered aloud. “What kind of smile do we get from that?”

The team recently detailed its system in the journal Sensors. Yu, Gao and Walia authored the paper with Anil Kumar Nalini Chandran, a postdoctoral researcher in agronomy and horticulture, and Puneet Paul, a former postdoctoral researcher now with Bayer. The researchers received support from the National Science Foundation, the Agricultural Research Division, and Nebraska’s Office of Research and Economic Development.