“It’s not in the middle of nowhere. It’s in the middle of everything, as Chancellor Green likes to say. Here’s this proof, in fact, that we are in the middle of everything.”

Ken Bloom hails from New Jersey, but he’s speaking of Nebraska, which the Husker professor of physics and astronomy has called home since 2004. As for the proof? It comes partly in the form of a new $51 million grant from the National Science Foundation, which has entrusted Bloom with helping to steward that money toward 19 coast-to-coast institutions — from Caltech and UCLA to MIT and Johns Hopkins — over the next five years.

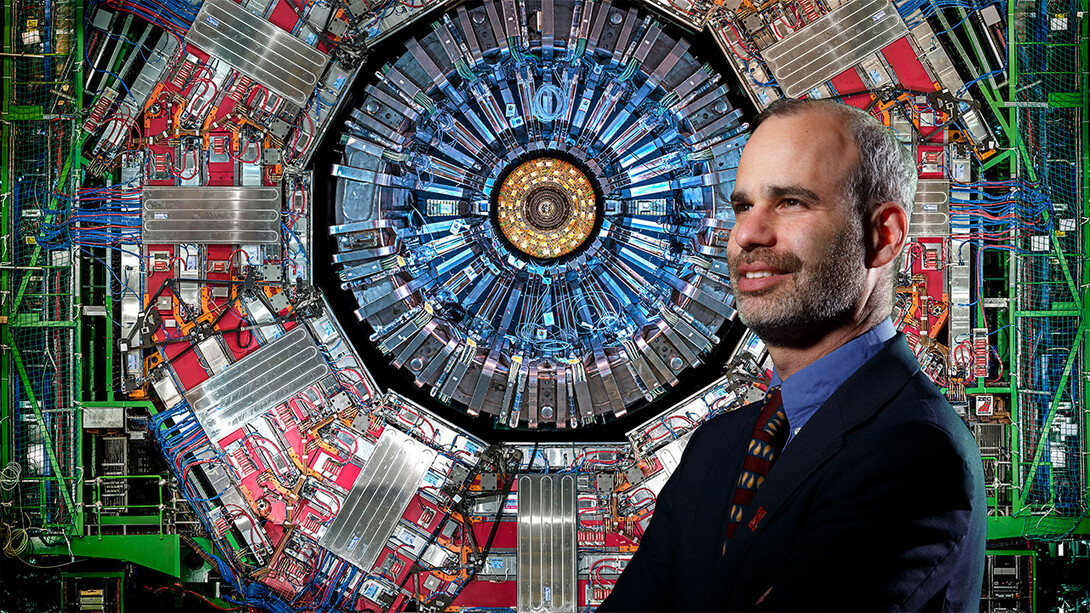

Alongside the University of Nebraska–Lincoln, those institutions will continue the ever-important, never-ending work of maintaining, operating and upgrading the Compact Muon Solenoid, a massive particle detector that particle physicists know as the CMS. That detector is housed at the Large Hadron Collider, a 17-mile-long ring that accelerates particles to nearly the speed of light before smashing them together beneath the border between Switzerland and France.

All of it serves to better understand the subatomic particles — some familiar and stable, others exotic and observable only in the briefest aftermath of particle collisions — that form every thread of the fabric of the known universe. In 2012, experiments at the particle collider confirmed the existence of the long-theorized Higgs boson, which gives other particles their mass.

Nebraska’s own history of contributions to the Large Hadron Collider dates back to the early 1990s, nearly two decades before the particle smashing actually began. It reached a milestone in 2005, when the university’s Holland Computing Center became one of seven Tier-2 computing sites across the United States that store and distribute the inconceivable amounts of data pouring forth from the particle collider.

That same year, Bloom was tasked with managing the U.S. Tier-2 grid. In 2015, he was appointed software and computing manager for the U.S. CMS Operations Program, which is jointly coordinated by the U.S. Department of Energy and the National Science Foundation. And in early 2021, Bloom assumed the role of deputy manager of operations for the project. With that title now comes the responsibility of overseeing and shepherding the National Science Foundation’s latest funding of the CMS detector.

But the university’s center-of-everything mantra extends beyond just the NSF funding. The breadth of Nebraska’s contributions to the CMS Operations Program sets it apart even from the 19 peers receiving portions of the $51 million. Nebraska is the only institution to simultaneously:

- Receive funding from the NSF’s Elementary Particle Physics Program, which supports student and postdoctoral research on data coming from the CMS

- Operate a computing site in the Tier-2 network, which Bloom described as one of the NSF’s “flagship contributions” to the CMS

- Build and help install NSF-funded upgrades to CMS components, some of which are currently being constructed in Jorgensen Hall

- Upgrade software to handle the doubling of particle-collision data set to begin in 2022, along with the further increase expected before the end of the decade

Bloom recently took a brief break from his responsibilities with the CMS Operations Program to chat with Nebraska Today about why those operations are so vital, the discoveries they might unlock in the years to come, and the non-physics lessons the world could learn from the Large Hadron Collider.

What do operations look like in the context of the CMS? How will the newest round of NSF funding contribute to those operations?

I think that when many people imagine what researchers do on CMS, they are thinking about the measurements that we do, the papers that professors and students and postdoctoral researchers are publishing. And we do a lot of that: The CMS collaboration has published more than 1,000 papers since the Large Hadron Collider turned on in 2009, and we’ve learned a lot of particle physics from that.

But those are the last steps of the process. First, you have to record and process the data. That means operating a scientific instrument the size of a building, a 100-megapixel camera that records images of particle collisions at a rate of 40 megahertz, or 40 million times a second. That instrument has to be continually operated, maintained, sometimes repaired, sometimes upgraded. And then all of the data needs to be stored, processed, moved around and made accessible to scientists all over the world. This is operations: the things that underlie all the research we do. A successful research program has to be built on a successful operations program.

I’m part of the leadership team that looks after that and tries to make sure that we’re using resources efficiently, getting ahead of problems before they might occur, and doing our best to serve physicists in the U.S. who depend on this work to do their science.

One of the project’s ongoing priorities is to use the discovery of the Higgs boson as a means of making other discoveries. How are particle physicists going about that?

We spent 50 years wondering whether there actually was a Higgs boson, and in 2012, we discovered that, indeed, there was. Now you can start exploring the properties of the Higgs and see what you can learn from that. The Standard Model of particle physics specifies all of the properties of the Higgs except for its mass (and thus some properties that depend on the mass). Now that we’ve observed the Higgs and measured its mass, we can test whether all of those predictions are correct.

There are some perfectly plausible theories of new physics that predict small deviations of Higgs properties from the predictions of the Standard Model, even as small as 1%. You need a huge number of Higgs bosons to make measurements of sub-1% precision and test those theories. Now, we’re not going to get enough Higgs bosons in the next five years to do that, but we’ll still make more and more accurate measurements, and if we find anything that’s not in line with expectations, we’ll know that something else is going on. This is a surefire activity at the Large Hadron Collider over the next few years; we know that there are Higgs bosons, we know that we’ll keep accumulating them, and we’ll be prepared for any surprises.

It’s estimated that roughly one-quarter of the universe consists of still-mysterious dark matter. How might the CMS help advance our understanding of it?

We know that there is dark matter in the universe, because we can look up in the skies and see galaxy rotation curves that don’t agree with the amount of luminous matter in them, and so forth. But we have no idea what dark matter is made of, what its particle content is.

The hope is that we could potentially generate dark matter in the laboratory: that in these high-energy collisions, in which you’re making new particles, some of them might be dark matter particles. Now, they’re going to be hard to detect, because by their very nature, they don’t interact with other matter, like that in our detector, except gravitationally. But that would be reflected in an apparent non-conservation of energy and momentum; we’d see some sort of energy imbalance that doesn’t look right, and it’s because something escaped that we didn’t detect.

There’s absolutely no guarantee that we’ll be able to produce dark matter in the laboratory. It might not even be possible at the Large Hadron Collider. But it’s an opportunity, we have to try, and that’s going to be a very active part of our physics program in the coming years.

Another CMS-related priority going forward is to “explore the unknown.” How so?

As we’re looking at the highest-energy particle collisions that can be achieved in a laboratory environment, this is our best chance to find something really unexpected. There are lots of theories of new physics out there, and we can test them in our data. Most likely we’ll rule them out, or partially rule them out, or constrain them. But we might get lucky, and you can’t know if you don’t look.

The Large Hadron Collider experiments are very broadband science; we’re trying to record a very inclusive set of particle collisions that allow us to ask a very broad set of questions about it. My favorite metaphor for this is the U.S. Census, in which a huge team of people with a diverse set of skills acquires a really big data set about the country. Then teams of researchers can use that broad data set to investigate problems that are interesting to them. That’s what we do here, too.

Better understanding the fabric of reality seems, by itself, a worthy enough goal. But what sorts of practical applications do you think might emerge, even indirectly, from the work being done at the Large Hadron Collider?

In the end, this is basic research, and you can’t know in advance what might come out of it; that’s one of the reasons that you do it. We always have to mention the World Wide Web, which was invented at CERN. It’s something that particle physicists made for particle physics purposes, to easily share information among a geographically distributed group of people. Now, the whole world eats lunch off the web. You can’t know what’s going to become a big economic driver in the future.

The Large Hadron Collider is a very high-radiation environment, and we need to work with materials and electronics that can withstand large radiation doses and still function. We push the limits on that technology.

We are by no means alone on this, but on the software and computing side, we do a lot of work in the area of machine learning and artificial intelligence. We are looking for sets of events that are very subtly different from others, and that’s a great environment for artificial intelligence. Now, the corporate world probably has a lot more resources that they can throw at this than we do, but we attract talent into this area by having scientifically interesting problems. We have people who are interested in using the latest, greatest tools to solve these problems, and you also learn more about the tools while you’re at it.

We’re also training people who become experts in these tools. There’s a big training aspect in particle physics. Not everyone who is working in the field will stay in it forever; many of them will head off into other areas and bring their technical and collaborative skills with them. Businesses say that they want skilled workers who can collaborate in teams. Particle physicists are those kinds of people.

What most excites you about the future of the CMS and Nebraska’s role in it?

I’m always excited about the creativity and expertise that people bring to the problems of particle physics. My colleagues have so many clever ideas for how to build instruments, how to use computing more efficiently, how to get more information out of the data. It’s really neat to see how much talent we have in the collaboration, and how they apply it to these problems.

And beyond physics, per se, there’s the fact that we successfully run an international collaboration of this size — that you can get 3,000 people to do something, 3,000 people who are spread all over the world, speaking different languages, coming from different cultures, but committed to the goal of trying to understand the universe a little better. In a time when there is so much conflict in the world, it’s heartening to see that we can do this project successfully. I think that we are a model for the world for how people can work together and get things done.